Understanding the Answers Algorithm

Authors: Pierce Stegman, Data Scientist & Michael Misiewicz, Data Science Manager

Product: Answers

Blog Date: January 2021

Yext Answers uses natural language understanding to interpret your customers' intent and deliver the most relevant search results. We built this Understanding the Answers Algorithm site so that you can see how the algorithm works and try it out for yourself! Below you'll find the types of entities analyzed and our process for determining a searcher's intent.

Entities Analyzed

Upon clicking "Analyze", you will see that entities are highlighted. The model is trained to identify the following entities:

- Generic people (ex: CEO, user, client)

- Specific people (ex: Howard, Steve, Jill)

- Relative location (ex: near me)

- Street roads

- Cities

- States or regions

- Countries

- Postal codes

- Type of location (ex: office, hospital, testing center)

- Date or time

- Institution or brand (ex: Yext)

- Physical product (ex: iPhone)

- Intangible product (ex: Yext Answers)

- Healthcare procedure (ex: hip replacement)

- Healthcare condition (ex: covid-19)

- Healthcare speciality (ex: cardiologist)

- Quantity or range

- Events (ex: CES, WWDC, Google I/O)

- Routine business operation (ex: tire replacement, open account, change of address)

- Fees, limits, and charges

- Problem (ex: my computer won't turn on)

To give credit where credit is due: the Yext NER algorithm builds upon the BERT algorithm originally developed by Google back in 2018.

Process

Much like a human has memory, our machine learning model has built up an extremely large database of words. It further stores how all the words relate to each other. For example, the words "apple" and "red" are in the database, and the model has learned that they are associated.

The model is much more powerful than just what is in the database of words. Much like a human can deal with words they have not seen before, our algorithm can too. It uses the same techniques we learned back in grade school:

- Word parts (breaking a word up into its key parts)

- Context clues (looking at surrounding words)

Word Parts

If the algorithm sees a word it does not recognize, it breaks it down into its key components. For example, if it does not recognize the word "Interdisciplinary", it will break it up into "inter", "disciplin", and "ary". It can then search its database for these key components to infer a base understanding of the word. This base understanding is later improved through context clues.

Context Clues

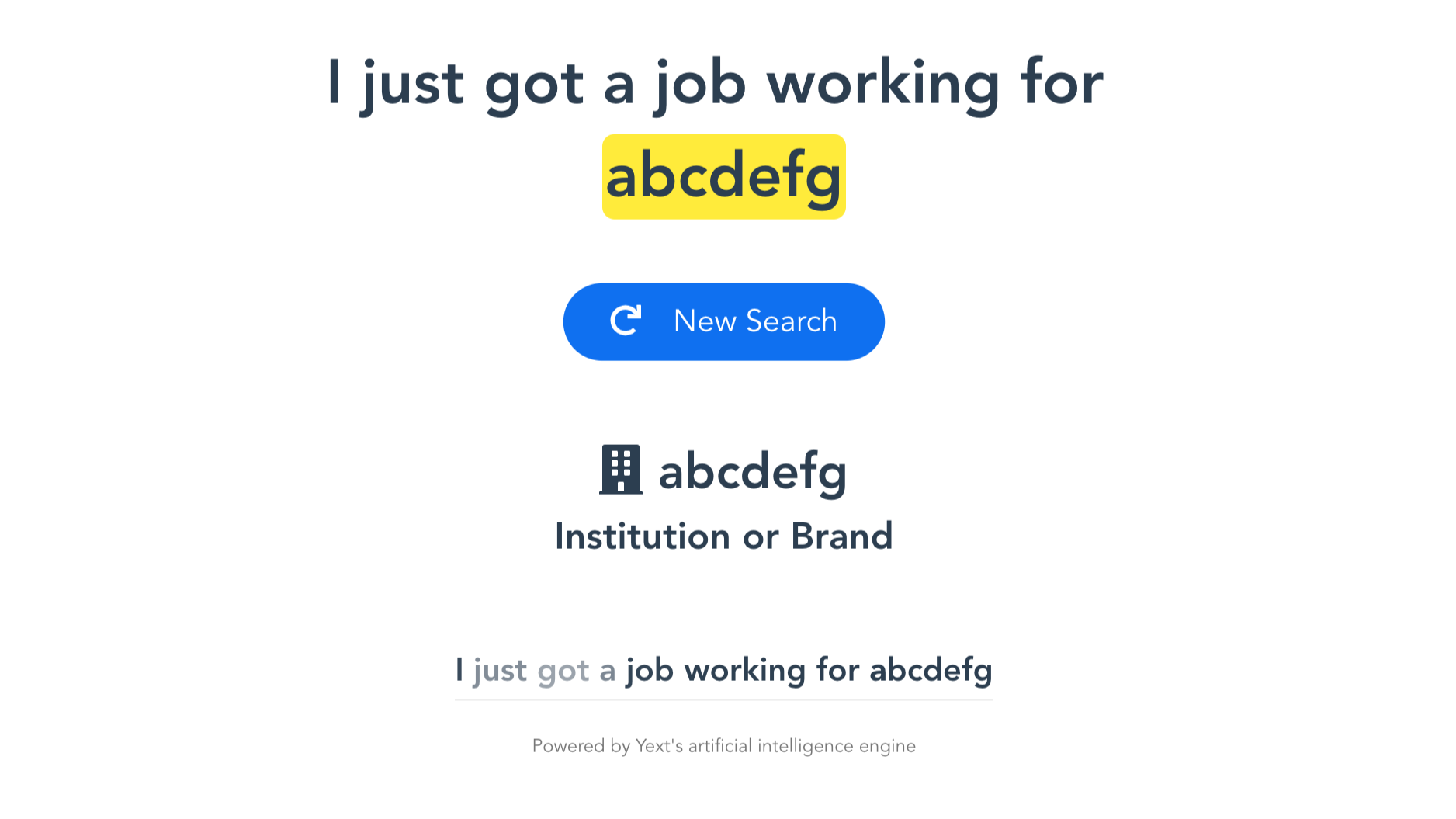

Our algorithm can also look at surrounding words to help infer meaning. For example, if we enter the query "I just got a job working for abcdefg", we know that "abcdefg" is not a word stored in the database, and it certainly isn't a well known company. Despite this, the algorithm is able to correctly identify it as an "Institution or Brand". It did this by looking at surrounding words.

Because we thought it would be interesting, we actually track where the algorithm looks for context clues and how important it thinks each clue is. Looking below the "Institution or Brand" label, you will see the query is presented again, but this time with words shaded in gray. The darker a word, the more important the algorithm found it as a clue. We can see that in determining that "abcdefg" was a company, it found "job working for ..." relevant, while not paying much attention to "just got a". This is in line with how we would expect a human to behave. The algorithm was able to recognize that in the past, when it saw "job working for …", the name of a company followed.

Try it out!

If you want to see for yourself how the Answers algorithm works, head to our Understanding the Answers Algorithm site. If you have any additional questions about Answers or the algorithm feel free to stop by the Hitchhikers Community or attend weekly Office Hours.

All information above was last updated and checked on January 8th, 2020.