Key Terms and Introduction to How Search Engines Work | Yext Hitchhikers Platform

What You’ll Learn

In this section, you will learn:

- Key terms related to Search Engine Optimization (SEO)

- How search engines work

- The three major steps that determine which results appear in search and how they are ranked

Key Terms

An effective Search Engine Optimization strategy is critical to answering the complex questions consumers ask every day. This unit defines a few key terms used throughout this module and introduces how search engines work.

Search Engine Optimization (SEO) - The process of improving the quality and quantity of website traffic by increasing the visibility of a website or a web page to users of a web search engine.

Schema.org Markup (also referred to as Schema/Structured Data) - Schema.org is a collaborative effort among the major search engines to improve the web by creating a shared structured data markup vocabulary. Web developers can use this universal vocabulary by embedding markup in the HTML of a page, which lets them communicate with all participating search engines in the same way. On-page markup helps search engines understand the information on web pages and provide richer search results.

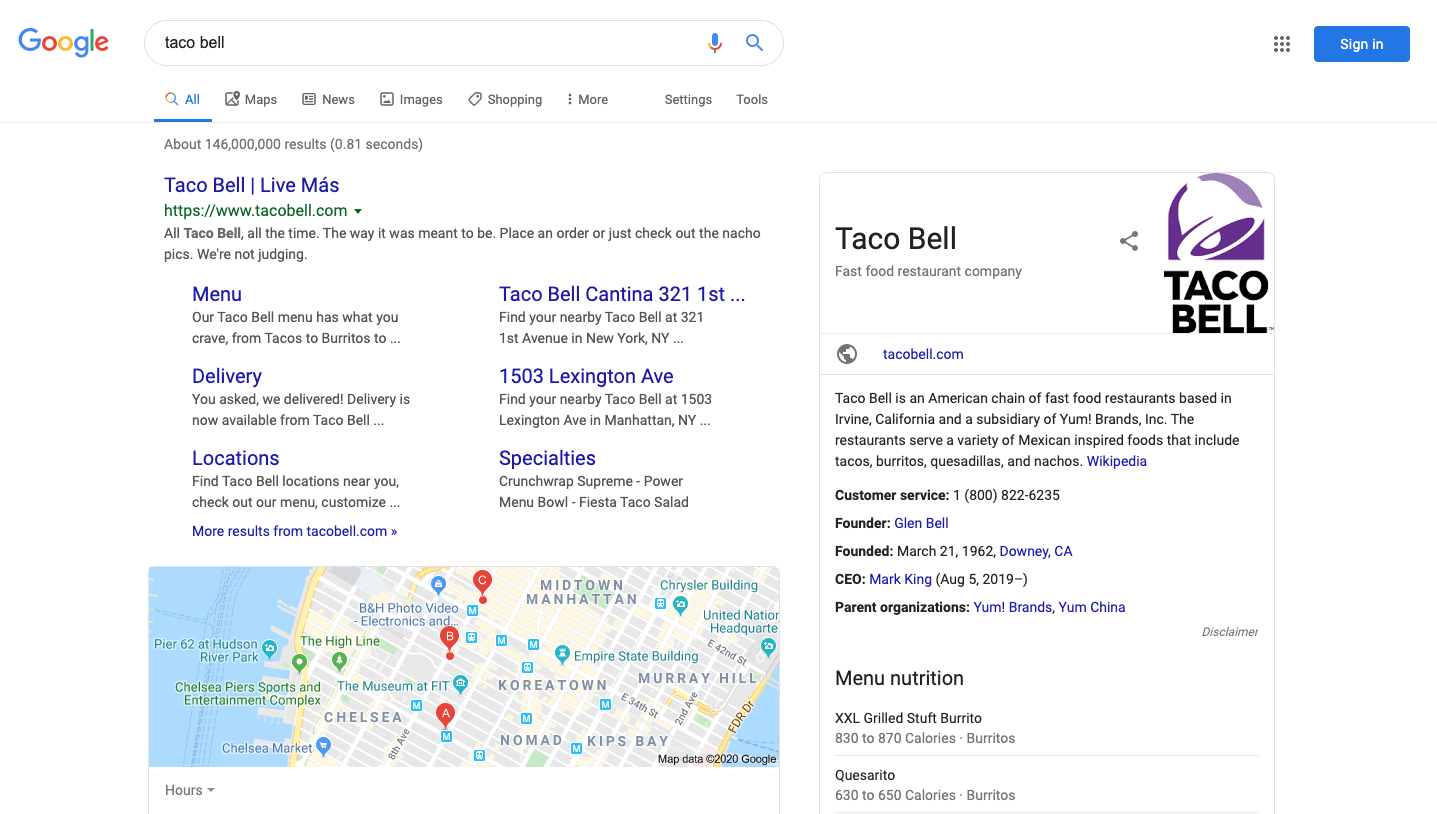
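To make on-page markup more concrete, here is a minimal Python sketch that builds a Schema.org `LocalBusiness` description as JSON-LD. It wraps the result in the `<script type="application/ld+json">` tag that is commonly embedded in a page's HTML. JSON-LD is one of several supported markup formats. The helper name `build_local_business_jsonld` and the business details are hypothetical, and the properties shown are only a small subset of the vocabulary.

```python
import json


def build_local_business_jsonld(name, url, telephone, street, city, region, postal_code):
    """Return a Schema.org LocalBusiness description as JSON-LD inside a <script> tag.

    Hypothetical helper for illustration only.
    """
    data = {
        "@context": "https://schema.org",
        "@type": "LocalBusiness",
        "name": name,
        "url": url,
        "telephone": telephone,
        "address": {
            "@type": "PostalAddress",
            "streetAddress": street,
            "addressLocality": city,
            "addressRegion": region,
            "postalCode": postal_code,
        },
    }
    # Placing this tag in the page's HTML lets crawlers read the structured data.
    return '<script type="application/ld+json">\n' + json.dumps(data, indent=2) + "\n</script>"


if __name__ == "__main__":
    # Hypothetical example business.
    print(build_local_business_jsonld(
        name="Example Coffee Shop",
        url="https://www.example.com",
        telephone="+1-555-0100",
        street="123 Main St",
        city="Springfield",
        region="IL",
        postal_code="62701",
    ))
```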

Search Engine Results Page (SERP) - The pages displayed by search engines in response to a query by a searcher. These can include links, listings, or other rich content.

How Do Search Engines Work?

Every time you search, there may be thousands, sometimes millions, of web pages with helpful information. For Google, the work of deciding which results to show starts long before you type a query, and it is guided by a commitment to providing the best information. Search engines discover, understand, and organize content on the internet, with the goal of offering the most relevant results to the questions searchers are asking. This process takes three steps:

Crawling

This is the process where search engine robots find documents (unique URLs) on the Internet and catalog the code & content on each URL.

You might see these ‘robots’ referred to as spiders or web crawlers. To discover new pages, crawlers follow links from page to page, much as a normal user might.
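The link-following idea can be sketched as a breadth-first traversal. This minimal Python sketch uses a tiny hypothetical web stored in a dictionary (`LINK_GRAPH`) instead of fetching real pages, so it shows only the discovery logic. The crawler starts from a seed URL, reads each page's links, and queues any URL it has not seen before. The `max_pages` budget is also an illustrative assumption.

```python
from collections import deque

# Hypothetical miniature web: each URL maps to the links found on that page.
LINK_GRAPH = {
    "https://example.com/": ["https://example.com/about", "https://example.com/blog"],
    "https://example.com/about": ["https://example.com/"],
    "https://example.com/blog": ["https://example.com/blog/post-1", "https://example.com/about"],
    "https://example.com/blog/post-1": [],
}


def crawl(seed, link_graph, max_pages=100):
    """Discover URLs reachable from `seed` by following links breadth-first."""
    discovered = [seed]      # URLs in the order they were found
    seen = {seed}            # prevents queuing the same URL twice
    queue = deque([seed])
    while queue:
        url = queue.popleft()
        for link in link_graph.get(url, []):  # "fetch" the page and read its links
            if link in seen:
                continue
            if len(discovered) >= max_pages:
                return discovered  # stop once the crawl budget is used up
            seen.add(link)
            discovered.append(link)
            queue.append(link)
    return discovered


print(crawl("https://example.com/", LINK_GRAPH))
# ['https://example.com/', 'https://example.com/about', 'https://example.com/blog', 'https://example.com/blog/post-1']
```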

As you can imagine, crawling the entire public Internet page by page is an enormous task. To crawl efficiently, a crawler first checks a site's robots.txt file, which tells search engine crawlers which pages or files they can or can't request from your site. As the crawlers visit these websites, they use links on those sites to discover other pages. The software pays special attention to new sites, changes to existing sites, and dead links. Computer programs determine which sites to crawl, how often, and how many pages to fetch from each site.
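Python's standard library includes `urllib.robotparser`, which applies robots.txt rules the way a well-behaved crawler would before it requests a page. This sketch parses a hypothetical robots.txt and checks which URLs each crawler may request. The rules and the `ExampleBot` user agent are made up for illustration. Real search engine crawlers use their own parsers, and details such as wildcard handling can differ from this module.

```python
from urllib.robotparser import RobotFileParser

# A hypothetical robots.txt for example.com.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /cart

User-agent: ExampleBot
Disallow: /
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

for agent, url in [
    ("Googlebot", "https://example.com/products"),    # falls under "*": allowed
    ("Googlebot", "https://example.com/admin/login"),  # "/admin/" is disallowed for "*"
    ("ExampleBot", "https://example.com/products"),    # ExampleBot is blocked from everything
]:
    print(agent, url, parser.can_fetch(agent, url))
# Expected: True, False, False
```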

We recommend registering your sites directly with Google Search Console and Bing Webmaster Tools and submitting your sitemap to facilitate the crawling process.
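A sitemap is an XML file that lists the URLs you want crawled, using the sitemaps.org protocol. Here is a minimal Python sketch that generates one with the standard library's `xml.etree.ElementTree`. The page list and the `build_sitemap` helper are hypothetical, and only the required `<loc>` element and the optional `<lastmod>` element are shown.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages):
    """Build sitemap XML from (url, last_modified_date) pairs (hypothetical helper)."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        ET.SubElement(url, "lastmod").text = lastmod  # W3C Datetime format, e.g. YYYY-MM-DD
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + ET.tostring(urlset, encoding="unicode")


if __name__ == "__main__":
    # Hypothetical pages for example.com.
    print(build_sitemap([
        ("https://example.com/", "2024-01-15"),
        ("https://example.com/about", "2023-11-02"),
    ]))
```

The resulting file is typically hosted on your site (for example at `/sitemap.xml`) and submitted through Google Search Console and Bing Webmaster Tools.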

Indexing

Crawled data is then stored in the search engine’s index. In order for results to be returned in search, the content must first be indexed.

When crawlers find a webpage, Google's systems render the content of the page just as a browser does. Google makes note of key signals, from keywords to website freshness, and keeps track of them all in the Search index.

The Google Search index contains hundreds of billions of web pages and is well over 100,000,000 gigabytes in size. It's like the index in the back of a book: there is an entry for every entity seen on every webpage. When Google indexes a web page (URL), it adds that page to the entries for all of the entities it contains.
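The book-index analogy corresponds to an inverted index, which maps each term to the pages that contain it. This minimal Python sketch uses lowercase words as a simplified stand-in for entities. It assumes a hypothetical `PAGES` dictionary of already-crawled content. A production index stores far more than this, including positions and ranking signals.

```python
import re
from collections import defaultdict


def tokenize(text):
    """Lowercase the text and split it into word tokens (a stand-in for entity extraction)."""
    return re.findall(r"[a-z0-9]+", text.lower())


def build_index(pages):
    """Map each term to the set of URLs whose content contains it."""
    index = defaultdict(set)
    for url, content in pages.items():
        for term in tokenize(content):
            index[term].add(url)  # add this page to the term's entry
    return index


def lookup(index, query):
    """Return the URLs that contain every term in the query."""
    terms = tokenize(query)
    if not terms:
        return set()
    results = set(index.get(terms[0], set()))
    for term in terms[1:]:
        results &= index.get(term, set())
    return results


# Hypothetical crawled pages.
PAGES = {
    "https://example.com/coffee": "Fresh coffee roasted daily in Springfield",
    "https://example.com/tea": "Loose leaf tea and fresh pastries",
}
index = build_index(PAGES)
print(sorted(lookup(index, "fresh coffee")))  # ['https://example.com/coffee']
```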

Ranking

Relevant content in the index is then returned based on a user’s query, with the most relevant and trustworthy content appearing higher on the search engine results page (SERP), i.e. getting a higher rank.

Google sorts through hundreds of billions of webpages in its search index to find the most relevant, useful results in a fraction of a second, and presents them in a way that helps you find what you're looking for. These ranking systems are made up of not one but hundreds of algorithms.

To give you the most useful information, search engines assemble a candidate set of results based on many factors, including the words in your query, the meaning of the query, and the relevance of each page to it.

Their algorithms then weight each factor according to a number of undisclosed criteria, which may include the expertise of sources, the usability of pages, and your location and settings. The weights can also depend on the nature of your query. For example, the freshness of content plays a bigger role in answering questions about current news topics than in answering questions about dictionary definitions. Together, these factors establish the result ranking for that person at that time.
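Real ranking systems combine hundreds of algorithms with undisclosed weights, so the following is only a toy illustration of the freshness example. In this minimal Python sketch, a hypothetical `rank` function combines two made-up 0 to 1 scores, relevance and freshness, using weights that depend on the query type. The factors, the weights, and the `"news"` query type are all assumptions, and the query type is taken as already known.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    url: str
    relevance: float  # 0..1, how well the page matches the query (hypothetical score)
    freshness: float  # 0..1, how recently the page was updated (hypothetical score)


def weights_for(query_type):
    """Return (relevance_weight, freshness_weight); freshness counts more for news queries."""
    if query_type == "news":
        return 0.5, 0.5
    return 0.9, 0.1


def rank(candidates, query_type):
    """Order candidate URLs by a weighted score, highest (top of the SERP) first."""
    w_rel, w_fresh = weights_for(query_type)

    def score(c):
        return w_rel * c.relevance + w_fresh * c.freshness

    return [c.url for c in sorted(candidates, key=score, reverse=True)]


candidates = [
    Candidate("https://example.com/archive", relevance=0.9, freshness=0.1),
    Candidate("https://example.com/today", relevance=0.7, freshness=1.0),
]
print(rank(candidates, "news"))        # ['https://example.com/today', 'https://example.com/archive']
print(rank(candidates, "definition"))  # ['https://example.com/archive', 'https://example.com/today']
```

The same two pages swap places depending on the query type. This mirrors, in a very simplified form, how the same factor can matter more or less depending on what the searcher is asking.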

On top of that, secondary systems may personalize results, reduce bias, and more. Over time, the search engine learns from the results it provides and uses what it learns to shape the results for future queries.
