Search Algorithms | Yext Hitchhikers Platform

Overview

One of the key advantages of Yext Search is its multi-algorithm strategy. Search deploys multiple algorithms in production that can either work in isolation or interact with each other, depending on what the user configures.

Our in-house data science and ML operations teams have created and fine-tuned advanced ML models that are regularly trained on large datasets of human-labeled data to ensure high-quality, highly accurate outputs.

The goal of this document is to explain each algorithm used in production today, how they work, and how they interact with each other.

Our Algorithms

builtin.location) are only available on the Advanced Search tier. Check out the Search Tiers reference doc to learn more about the features that are available in each tier.

Once a user runs a query, Search determines which algorithm(s) to apply based on which searchable field type(s) are configured. The searchable fields algorithms are:

If you don’t already have an account set up, try out the algorithm demos to get a sense of how they work!

doc.score

The Search algorithm uses a concept of a document ‘score’, doc.score, which is simply the number of token matches in the result text.

Search uses doc.score in three (3) main junctures:

| Algorithm | Purpose |
|---|---|
| Keyword Search | To calculate the BM-25 score |
| Phrase Match | To represent token matches. If there is a Phrase Match, an arbitrarily large boost is applied to the result |
| Semantic Search | To represent token matches. If a result exceeds the similarity threshold, an arbitrarily large boost is applied to the result |

Keyword Search

The most basic algorithm offered today is Keyword Search, which relies on keyword matches between the query and the result. When you enable Keyword Search on a field, Search will return results whenever there is a match between a query and field token, unless they are stop words. When ranking results, Search considers the relative importance of the token with respect to all of the content in your platform.

Keyword Search uses an algorithm called BM-25, which works on the concept of TF-IDF, or term frequency-inverse document frequency. Put simply, it measures how important a word/term is to a result field, for each result in a result set. The result with the most occurrences of ‘important’ terms is deemed the most relevant.

- TF, or term frequency, works by looking at the frequency of a specific term relative to the field value string. This is calculated by counting the number of times a term appears in a string and dividing it by the total number of words in that string.

- Stop words are words that appear all the time and generally carry no meaning for the searcher, like ‘a’, ‘the’, and ‘of’. They are only used as tiebreakers when two results score the same after adjusting for stop words. Search maintains a built-in list of these, and users can define additional stop words in their configuration specific to their business. However, since Keyword Search is entirely based on token matches, stop words are used for token matching (and thus may return results) when no non-stop words are present.

- IDF, or inverse document frequency, applies weighting to words such that common terms have a minimized weight, and rarer terms have a greater impact.

The individual TF and IDF scores are multiplied together to arrive at a TF-IDF score. The higher the score, the more relevant that word is to the document. Scores are calculated uniquely for each experience, because terms that are rare in one experience may be common in another, and vice versa.
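
To make the arithmetic concrete, here is a minimal sketch of a plain TF-IDF calculation. It is not the production BM-25 implementation: the whitespace tokenizer, the `log(N / df)` form of IDF, and the three-item corpus are all assumptions made for illustration.

```python
import math


def term_frequency(term: str, text: str) -> float:
    """Occurrences of `term` divided by the total number of words in `text`."""
    tokens = text.lower().split()
    if not tokens:
        return 0.0
    return tokens.count(term.lower()) / len(tokens)


def inverse_document_frequency(term: str, corpus: list[str]) -> float:
    """Assumed IDF form: log(N / df). Terms found in every text get weight 0."""
    containing = sum(1 for text in corpus if term.lower() in text.lower().split())
    if containing == 0:
        return 0.0
    return math.log(len(corpus) / containing)


def tf_idf(term: str, text: str, corpus: list[str]) -> float:
    """TF and IDF multiplied together, as described above."""
    return term_frequency(term, text) * inverse_document_frequency(term, corpus)


# Hypothetical field values standing in for one experience's content.
corpus = ["blue running shoes", "red running shoes", "blue wool hat"]

# 'hat' appears in one value and 'blue' in two, so 'hat' is weighted higher.
hat_score = tf_idf("hat", corpus[2], corpus)
blue_score = tf_idf("blue", corpus[2], corpus)
```

Because IDF depends on the corpus, running the same term against a different set of field values gives a different score, which is why scores are specific to each experience.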

Phrase Match

Phrase Match is a variation of Keyword Search that only returns a given result for a query when the entire field value appears in the search query. Rather than calculating relevance by the weighted frequency of a term or set of terms amongst all the words in a document, Phrase Match will only consider a result relevant if all terms in the field value appear in the query as a continuous phrase.

Let’s take a look at a few examples below:

Query: When is the super bowl?

| Field Value | Keyword Match | Phrase Match |
|---|---|---|
| Super Bowl | Yes | Yes |
| Super Duper Bowl | Yes | No |
| Rose Bowl | Yes | No |
| NBA Finals | No | No |

Phrase Match works well with keywords fields on entities, or in any other scenario where you’d like to use Keyword Search, but do not want noisy keyword matches on parts of a field value. Phrase Match applies a boost on the result’s doc.score, which is discussed in more detail in Phrase Match + Keyword Search.
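
The difference between the two match types in the table above can be sketched as follows. This is an illustrative approximation only: the tokenizer and the small `STOP_WORDS` set are assumptions, not the built-in list Search uses.

```python
import re

# Illustrative subset only; the real built-in stop word list is not shown here.
STOP_WORDS = {"a", "the", "of", "is", "when"}


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def keyword_match(query: str, field_value: str) -> bool:
    """True if any non-stop-word query token appears in the field value."""
    query_tokens = set(tokenize(query)) - STOP_WORDS
    return any(token in query_tokens for token in tokenize(field_value))


def phrase_match(query: str, field_value: str) -> bool:
    """True if the whole field value appears in the query as a contiguous phrase."""
    query_tokens = tokenize(query)
    field_tokens = tokenize(field_value)
    size = len(field_tokens)
    if size == 0:
        return False
    return any(
        query_tokens[i:i + size] == field_tokens
        for i in range(len(query_tokens) - size + 1)
    )


query = "When is the super bowl?"
matches = {
    value: (keyword_match(query, value), phrase_match(query, value))
    for value in ["Super Bowl", "Super Duper Bowl", "Rose Bowl", "NBA Finals"]
}
```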

Semantic Search

Semantic Search incorporates a result’s similarity to the query’s underlying meaning.

There are often many different ways to phrase the same idea. Take a FAQ result for ‘How do I return an order?’ and some examples of query phrasing:

FAQ: How do I return an order?

| Example Queries | Cosine Similarity | Returned by Keyword Search? | Returned by Semantic Search? |
|---|---|---|---|
| How do I return an order? | 1 | Yes | Yes |
| How can I exchange my pair of shoes? | 0.85 | No | Yes |
| Regret my purchase and want to get rid of it | 0.30 | No | Yes |
| Where to get shoes on sale | 0.25 | No | No |
| You got games on your phone? | 0.10 | No | No |
| Movie theater near me | -0.03 | No | No |

As humans, we intuitively know that the first three queries should all return the FAQ result above. But the Keyword Search algorithm might not know to return the right result because not all of these queries contain the same words as the result, even if they mean the same thing.

By relying on semantic similarity rather than simple keyword matches, semantic search is able to understand that the first three queries above have a similar meaning to the question being asked.

Embedding Model

Semantic Search measures similarity by using a process called embedding. Embeddings as a search technology have been around for a while, but Google was the first to calculate these using transformer neural networks when they released BERT. Our Data Science team built our own embedding model on top of MPNet, which is similar to BERT.

When a user enters a query, the model embeds the query and a result. This transforms them into points in high-dimensional vector space. Here, a query and result that mean the same thing will be close together, even if they don’t contain any of the same words. Instead of returning results purely based on overlapping keywords, semantic search looks for results that are the closest in meaning to the user’s query.
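
Closeness between two embeddings is commonly measured with cosine similarity, the metric shown in the table earlier in this section. The sketch below computes it for plain Python lists. The vectors are toy values chosen for illustration; real embeddings come from the model and have many more dimensions.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors: 1 = same direction, -1 = opposite."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # Similarity is undefined for a zero vector; treat it as no signal.
    return dot / (norm_a * norm_b)


# Toy 3-dimensional "embeddings" (hypothetical values).
query_vector = [0.9, 0.1, 0.3]
similar_result = [0.8, 0.2, 0.4]
unrelated_result = [-0.1, 0.9, -0.2]

similar_score = cosine_similarity(query_vector, similar_result)
unrelated_score = cosine_similarity(query_vector, unrelated_result)
```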

Token Matching and Similarity Thresholds

Though the main value proposition of semantic search lies in its ability to measure semantic similarity, the algorithm doesn’t outright discard token matches - just because they aren’t always the best way of indicating meaning doesn’t mean they shouldn’t be used at all!

The semantic return threshold for a result is 0.3. Any result at or above this is considered semantically similar enough to be returned organically by semantic search. While stop words may prevent token matching, results with stop words may still be returned if they meet this semantic similarity threshold.

When semantic similarity is low, there isn’t a strong signal from the embedding model, so it makes more sense to just look at the number of token matches. This works best for short, one-to-two-word queries that don’t contain much semantic meaning like ‘blue hat’ or ‘best shoes’. Thus, any result below the semantic return threshold will still be returned if there is a Keyword Search match, but if not, will not be returned at all.

Conversely, if semantic similarity is high, the embedding model is sending a strong signal that the result is very relevant. The algorithm bakes in an additional layer of logic, where if the result meets or exceeds a certain similarity threshold, it gets boosted straight to the top regardless of the number of token matches. The similarity boost threshold is 0.65.

Here is an explanation of the logic using code:

```javascript
function semanticScore(doc, similarity) {
  // similarity: the output of the similarity function for this query and result
  // matchedTokens comes from our constant score-per-token-match approach
  const matchedTokens = doc.score;
  // The similarity boost threshold
  const boostThreshold = 0.65;
  if (similarity > boostThreshold) {
    return 1000 + matchedTokens + similarity;
  }
  return matchedTokens + similarity;
}
```

Here is an example of how it works in practice:

Query: are bonita fish big?

| Result | Matched Tokens | Similarity | Rank |
|---|---|---|---|
| How large are bonita fish? | 2 | 0.9 | 1 |
| What’s a skipjack tuna? | 0 | 0.75 | 2 |
| Are bonita fish carnivorous? | 2 | 0.5 | 3 |
| Are bluefin tuna big? | 1 | 0.4 | 4 |
| Are catfish big? | 1 | 0.35 | 5 |

In this example, “What’s a skipjack tuna?” was bumped all the way up to #2 because, although it has no matched tokens, its similarity is above the similarity threshold of 0.65, so it gets bumped up to the top. Note that “How large are bonita fish?” is even more similar so it still gets the #1 position.
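
The ranking in this example can be reproduced with a short sketch. The thresholds (0.3 and 0.65) and the boost of 1000 come from this section; treating the return threshold as inclusive, and the function and variable names, are assumptions.

```python
RETURN_THRESHOLD = 0.3   # semantic return threshold
BOOST_THRESHOLD = 0.65   # similarity boost threshold
BOOST = 1000             # the "arbitrarily large" boost used in the logic above


def semantic_score(matched_tokens: int, similarity: float) -> float:
    """Token matches plus similarity, with a large boost for very similar results."""
    if similarity > BOOST_THRESHOLD:
        return BOOST + matched_tokens + similarity
    return matched_tokens + similarity


def is_returned(matched_tokens: int, similarity: float) -> bool:
    """Returned if similar enough (assumed inclusive) or if any token matched."""
    return similarity >= RETURN_THRESHOLD or matched_tokens > 0


# (result, matched tokens, similarity) for the query "are bonita fish big?"
results = [
    ("Are catfish big?", 1, 0.35),
    ("What's a skipjack tuna?", 0, 0.75),
    ("Are bluefin tuna big?", 1, 0.4),
    ("How large are bonita fish?", 2, 0.9),
    ("Are bonita fish carnivorous?", 2, 0.5),
]

ranked = sorted(
    (r for r in results if is_returned(r[1], r[2])),
    key=lambda r: semantic_score(r[1], r[2]),
    reverse=True,
)
ranked_names = [name for name, _, _ in ranked]
```

Sorting by this score puts both boosted results first, ordered by similarity, followed by the remaining results ordered mostly by token matches.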

Inferred Filter

The idea behind inferred filters is to literally infer filters from the query, such as ‘color == blue’, or ‘location == New York’.

The inferred Filter algorithm behaves differently depending on the type of field it is applied on. The three (3) main categories of inferred Filter field behavior are:

- Normal Field Value (i.e. any field that is not builtin.location or builtin.entityType)

- Location (builtin.location)

- Entity Type (builtin.entityType)

Inferred Filters on Normal Fields

For a normal searchable field with inferred filtering configured, the algorithm will look for a field value’s tokens in the query (e.g. ‘blue hat’ or ‘doctors who accept blue cross blue shield’) and apply those tokens as a strict filter.

```json
"appliedQueryFilters": [
  {
    "displayKey": "Color",
    "displayValue": "Blue",
    "filter": {
      "color": {
        "$eq": "Blue"
      }
    },
    "type": "FIELD_VALUE"
  }
],
```

If the potential inferred filter field has two or more tokens, there needs to be a match with at least two tokens from the query for the inferred filter to be applied. Consider an inferred filter field value ‘Capital One’. In order for an inferred filter to be applied to this field value, the searcher’s query must contain the tokens ‘capital’ and ‘one’. This is because if someone searches ‘Capital’ (ambiguous) or ‘Capital Grille’ (a restaurant), applying an inferred filter match on ‘Capital One’ because of one token match on ‘Capital’ does not necessarily match the searcher’s intent.

Here are a couple of examples of queries and filter values that would and would not match:

| Query | Inferred Filter Match | No Inferred Filter Match |
|---|---|---|
| Capital | Capital | Capital Grille, Washington Capitals, Capital One, Capitol Hill |
| Samsung Galaxy | Samsung Galaxy, Samsung Galaxy S, Samsung Galaxy S3 | Samsung phone, Samsung smartphone, Samsung zflip |

If there are multiple matches on an inferred filter field of two or more tokens, the best match is used as the tiebreaker. The best match is determined by the highest percentage of tokens that are a match. Let’s assume that ‘blue cross’ and ‘blue cross blue shield’ are both candidate inferred Filter values for the ‘Insurance’ field. If the query is ‘blue cross’, the inferred filter will be applied on ‘blue cross’ because 2⁄2 tokens matched, as opposed to 2⁄4 for ‘blue cross blue shield’.
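
The two-token rule and the best-match tiebreaker can be sketched as below. This is a simplified model under stated assumptions: tokens are split on whitespace, and repeated tokens are matched as a multiset (which is what gives 2/4 for ‘blue cross blue shield’). The function names are hypothetical.

```python
from collections import Counter


def tokenize(text: str) -> list[str]:
    return text.lower().split()


def matched_token_count(query: str, field_value: str) -> int:
    """Number of field-value tokens that also appear in the query (multiset overlap)."""
    overlap = Counter(tokenize(query)) & Counter(tokenize(field_value))
    return sum(overlap.values())


def is_candidate(query: str, field_value: str) -> bool:
    """Single-token values need one match; longer values need at least two."""
    required = 1 if len(tokenize(field_value)) == 1 else 2
    return matched_token_count(query, field_value) >= required


def best_inferred_filter(query: str, field_values: list[str]):
    """Pick the candidate with the highest percentage of matched tokens."""
    candidates = [value for value in field_values if is_candidate(query, value)]
    if not candidates:
        return None
    return max(
        candidates,
        key=lambda value: matched_token_count(query, value) / len(tokenize(value)),
    )


insurance_values = ["blue cross blue shield", "blue cross"]
chosen = best_inferred_filter("blue cross", insurance_values)
```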

‘Using Up’ Tokens

When a token triggers an inferred filter, that token gets ‘used up’. This means that you can’t apply inferred filter and another algorithm, like keyword search, on the same token. Using the example above with ‘blue hats’, if the token ‘blue’ triggers an inferred filter, any other algorithms applied to that searchable field can only search on the token ‘hats’. Inferred filters are always applied first, over other searchable field algorithms.

If there are no additional matches from Keyword Search or the rest of the algorithms applied to that searchable field, or if there are no other algorithms aside from inferred filter enabled, Search shows all results that match the inferred filter(s). Continuing with the ‘blue hat’ example:

Inferred Filter: color == blue

| Scenario | Action |
|---|---|
| Keyword Search match for ‘hat’ | Return all hat entities that have color == blue |
| No Keyword Search match for ‘hat’ | Return all entities where color == blue |
| No other algorithm configured | Return all entities where color == blue |
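
The three scenarios in the table can be modeled with a small sketch. The entity shape (`name` and `color` keys), the catalog, and the `search` function are hypothetical stand-ins, and Keyword Search is reduced to a simple token lookup on the name.

```python
def search(query, entities, color_values, keyword_search_enabled=True):
    """Apply the inferred filter first, then search on the tokens that remain."""
    tokens = query.lower().split()

    # Tokens that trigger the inferred filter are 'used up'.
    used_up = [t for t in tokens if t in color_values]
    remaining = [t for t in tokens if t not in used_up]

    if used_up:
        entities = [e for e in entities if e["color"] in used_up]

    if not keyword_search_enabled:
        return entities

    keyword_hits = [
        e for e in entities
        if any(t in e["name"].lower().split() for t in remaining)
    ]
    if keyword_hits:
        return keyword_hits
    # With no keyword hits, fall back to everything matching the inferred filter.
    return entities if used_up else []


# Hypothetical catalog.
catalog = [
    {"name": "wool hat", "color": "blue"},
    {"name": "baseball hat", "color": "red"},
    {"name": "running shoes", "color": "blue"},
]
colors = {"blue", "red"}

blue_hats = [e["name"] for e in search("blue hat", catalog, colors)]
```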

Inferred Filters on Location Fields

The special inferred Filter behavior for location fields only works on builtin.location. Address subfields such as address.city and address.region are treated like regular fields in inferred filtering. We encourage using builtin.location for location search, to take full advantage of our NER model and Mapbox functionality.

When a user inputs a location search with inferred filter enabled on builtin.location, our Named Entity Recognition (NER) model will detect any key location-specific tokens in the query, such as the postal code ‘10011’ or ‘Central Park New York’, based on the user’s proximity bias and the prominence of the place. Then, we send those key tokens to a third-party service called Mapbox to find the central latitude and longitude of that place.

If NER is not supported for a particular language, we use an n-grams approach up to 3-grams. This sends all the combinations of up to three consecutive tokens to Mapbox, which then chooses the best one. For instance, for ‘new york city office’ we’d try:

- N=1: ‘new’, ‘york’, ‘city’, ‘office’

- N=2: ‘new york’, ‘york city’, ‘city office’

- N=3: ‘new york city’, ‘york city office’
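
Generating these candidates is straightforward. The sketch below builds every run of up to three consecutive tokens, assuming whitespace tokenization; the function name is hypothetical and the call to Mapbox is not shown.

```python
def candidate_ngrams(query: str, max_n: int = 3) -> dict[int, list[str]]:
    """All runs of 1..max_n consecutive tokens, grouped by length."""
    tokens = query.split()
    return {
        n: [" ".join(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]
        for n in range(1, max_n + 1)
    }


candidates = candidate_ngrams("new york city office")
```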

NER Location Detection is currently supported in English, French, German, Italian, and Spanish.

Only these key location tokens then get sent to Mapbox, where we choose the best result based on what Mapbox finds. Note that if you have a bounding box configured, we’ll only look for places within that bounding box, and we only search for places whose country is defined in the countryRestrictions setting of the config (which defaults to the US).

Mapbox also uses a combination of a proximity bias and place prominence to determine the order of places returned to us. When we pass the user’s lat/long to add a proximity bias to the places returned, Mapbox itself then decides in what order to return the places based on the user’s proximity to the place AND the prominence of the place. So for example, if you were to search for “Paris”, “Paris, France” would probably return as the top Mapbox result unless you were fairly close to “Paris, Texas”, since Paris, France is very prominent. However, for a bunch of cities with the same name and similar prominence, the proximity may play a larger role. Unfortunately, Search doesn’t have any insight into the weights of prominence and proximity that Mapbox uses for their calculations.

Once we have the Mapbox output, we then apply some additional logic where we prioritize places that actually have entity matches over ones that don’t. For instance, if the user searches ‘Arlington’ and Mapbox finds ‘Arlington, VA’ and ‘Arlington, TX’ and there are only Knowledge Graph entities for ‘Arlington, VA’, we eliminate ‘Arlington, TX’ as a candidate inferred Filter and use ‘Arlington, VA’.

Keep in mind that if a user included the state, such that the query was “Arlington Virginia” or “Arlington TX”, our system would have enough context to decide which Arlington to use.

Once we’ve identified a place, we take the central latitude and longitude of that location and, together with the boundingBox object in the API response, calculate an ‘approximate radius’ of the location within which to search for entities:

- If the approximate radius is between 0 and 25 miles, we search within a 25 mile radius

- If the approximate radius is between 25 and 50 miles, we search within a 50 mile radius

- If the approximate radius is greater than 50 miles, we use the approximate radius

The approximate radius behavior is user-configurable by setting the minLocationRadius property in the configuration JSON to the minimum radius you’d like to search within, in meters.

Once a place, its central lat/long, and search radius have been determined, all entities that do not fall within that radius get thrown out, and we filter the search results to all existing places that fall within the search radius. If there are no place entities within that radius, nothing gets returned.
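
The radius rules and the final distance filter can be sketched as follows. How the approximate radius is derived from the bounding box is not covered here, so it is taken as an input. Using the haversine formula for distance is an assumption, as are the entity shape and the example coordinates for Manhattan and Philadelphia.

```python
import math

EARTH_RADIUS_MILES = 3958.8


def search_radius_miles(approximate_radius: float) -> float:
    """Round small places up to 25 or 50 miles; keep larger radii as they are."""
    if approximate_radius <= 25:
        return 25.0
    if approximate_radius <= 50:
        return 50.0
    return approximate_radius


def haversine_miles(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance between two latitude/longitude points, in miles."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    d_phi = phi2 - phi1
    d_lambda = math.radians(lon2 - lon1)
    a = math.sin(d_phi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(d_lambda / 2) ** 2
    return 2 * EARTH_RADIUS_MILES * math.asin(math.sqrt(a))


def entities_within(entities, center, radius_miles):
    """Keep only the entities that fall inside the search radius."""
    lat, lon = center
    return [
        e for e in entities
        if haversine_miles(lat, lon, e["lat"], e["lon"]) <= radius_miles
    ]


brooklyn = (40.652601, -73.949721)  # central lat/long from a place lookup
entities = [
    {"name": "Manhattan office", "lat": 40.7831, "lon": -73.9712},
    {"name": "Philadelphia office", "lat": 39.9526, "lon": -75.1652},
]
nearby = entities_within(entities, brooklyn, search_radius_miles(6.0))
```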

API Response

As an example, here is what an inferred filter applied on ‘Brooklyn’ would look like in the API response:

```json
"appliedQueryFilters": [
  {
    "displayKey": "Location",
    "displayValue": "Brooklyn",
    "filter": {
      "builtin.location": {
        "$eq": "P-locality.66915052"
      }
    },
    "type": "PLACE",
    "details": {
      "latitude": 40.652601,
      "longitude": -73.949721,
      "placeName": "Brooklyn, New York, United States",
      "featureTypes": [
        "locality"
      ],
      "boundingBox": {
        "minLatitude": 40.566161483,
        "minLongitude": -74.042411963,
        "maxLatitude": 40.739446,
        "maxLongitude": -73.833365
      }
    }
  }
]
```

Inferred Filters on Entity Type Fields

Each vertical you create has a builtin.entityType field enabled for inferred Filtering by default. When you create an entity type, under the hood we’re attaching some ‘fields’ to that entity that correspond to the name of that entity type. If you create a ‘Location’ entity, for instance, we will generate builtin.entityType fields for ‘Location’ and ‘Locations’ (singular and plural forms of the entity name, for languages that make that distinction).

When a user runs a search, we apply an inferred Filter on builtin.entityType to get the proper entity types for each vertical. So if a user searches for ‘Locations near me’, builtin.entityType will limit the results to Location entities.

Special Cases

“Open Now”

If someone searches ‘open now’ (or its equivalent in other languages) and builtin.hours is configured for inferred filtering, then we filter to locations that are currently open.

“Near Me”

This functionality only works for builtin.location. In this case, we prompt the user for HTML5 location (the popup you get in your browser where it prompts you that ‘x wants to know your location’). Failing that, we use the searcher’s IP address to geolocate. Mapbox is not involved at all.

Static Filter

Static Filters are technically not an algorithm that relies on a pre-trained AI model, but they’re worth calling out here because they’re commonly used to manipulate search results. When applied, Static Filters narrow down a result set based on the filter conditions set by the user.

Static Filters are generated on the client-side, meaning they are pre-configured on the front-end and passed into the Search API. This also means Static Filters appear independently of the content of a user query. When a user queries an experience, any Static Filter conditions are passed as an additional query parameter, denoted by 'filters=' in the URL.

Static Filter behavior is pretty straightforward - it simply applies a filter on your results given specified conditions.

| Query | Pre-Filter Result | Static Filter(s) | Post-Filter Result |
|---|---|---|---|
| shoes | All shoes | color == blue | Shoes that are blue |
| Who are Yext’s customers? | All Yext customers | product == search, industry == healthcare | Healthcare customers with Search |
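
As a rough sketch of the second row, the code below applies two equality conditions to a result set and encodes them as a `filters=` query parameter. The `$eq` shape is borrowed from the appliedQueryFilters example earlier on this page; whether Static Filters use exactly that shape is an assumption, and the customer records and function names are hypothetical.

```python
import json
from urllib.parse import urlencode


def apply_static_filters(results: list[dict], filters: dict) -> list[dict]:
    """Keep results whose fields equal every '$eq' condition (other operators not handled)."""
    return [
        r for r in results
        if all(r.get(field) == condition["$eq"] for field, condition in filters.items())
    ]


def filters_query_string(filters: dict) -> str:
    """Serialize the filter conditions into a 'filters=' URL parameter."""
    return urlencode({"filters": json.dumps(filters)})


# Hypothetical result set for the query "Who are Yext's customers?"
customers = [
    {"name": "Acme Health", "product": "search", "industry": "healthcare"},
    {"name": "Bolt Retail", "product": "search", "industry": "retail"},
    {"name": "Care Clinic", "product": "listings", "industry": "healthcare"},
]
static_filters = {"product": {"$eq": "search"}, "industry": {"$eq": "healthcare"}}

filtered = apply_static_filters(customers, static_filters)
query_string = filters_query_string(static_filters)
```

Note that the conditions never look at the query text, which mirrors how Static Filters apply independently of what the user typed.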

Document Search

The purpose of Document Search is to take dense, long-form documents and extract relevant passages of text based on the query, also known as snippeting.

Today, Document Search is able to produce featured and inline snippets, but does so using the same keyword-based algorithm used in Keyword Search, rather than something like semantic vector search, for the time being.

A Featured Snippet is a type of Direct Answer that when given a question, and a span of text in a searchable document that exactly answers the question, serves the text as a direct answer like so:

Behind the scenes, Document Search will return the results using the BM-25 score (as discussed in Keyword Search ), then Search will apply the Extractive QA (Question/Answering) model on the top five results. As the name suggests, our Extractive QA model is a natural language algorithm used to extract the most relevant span of text from a paragraph.

If there is an exact answer to the question, as seen above, Search returns that answer as a Featured Snippet at the top of the results. If there isn’t one, no Featured Snippet is returned and the rest of the results are returned normally.

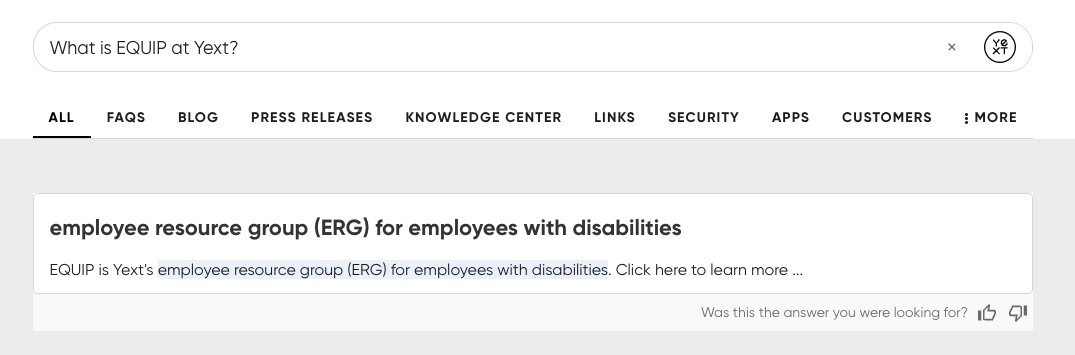

Not all queries will have an exact answer. For instance, if someone searches ‘Yext EQUIP ERG’, a direct answer isn’t returned because this isn’t really a question.

An Inline Snippet is used when there isn’t a direct question to answer, but for each entity, it still makes sense to show the span of text that is most relevant to the query.

A key difference is that there is only one featured snippet per query, but one inline snippet per entity. To produce these, we look for the ~250 character section of the body text containing the most token matches.
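
One way to picture the inline snippet selection is a sliding window over the body text. The sketch below is an assumption about how such a search could work, not the production logic: it tries a 250-character window starting at each word and keeps the first window with the most query-token matches.

```python
import re


def best_snippet(body: str, query: str, window: int = 250) -> str:
    """Return the window of `body` containing the most query-token matches."""
    query_tokens = set(re.findall(r"\w+", query.lower()))
    best_start, best_count = 0, -1
    for word in re.finditer(r"\w+", body):
        start = word.start()
        window_tokens = re.findall(r"\w+", body[start:start + window].lower())
        count = sum(1 for token in window_tokens if token in query_tokens)
        if count > best_count:  # Strict comparison keeps the earliest best window.
            best_start, best_count = start, count
    return body[best_start:best_start + window]


# Hypothetical long-form body text with one relevant sentence in the middle.
body = "filler " * 50 + "Yext EQUIP is an employee resource group at Yext." + " filler" * 50
snippet = best_snippet(body, "Yext EQUIP ERG")
```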

How Our Algorithms Interact

This section will discuss how the different searchable fields algorithms interact with each other.

Phrase Match + Keyword Search

If Phrase Match and Keyword Search are configured on the same field, both Phrase Match and Keyword Search matches get returned, but Phrase Match applies a boost on the result.

We calculate and rank results based on the score-per-token-match doc.score, which is where any Phrase Match boost is applied. The doc.score is also used as the Keyword Search output when combining Keyword Search and semantic search, described in the next section.

Query: ‘nike sportswear shorts’

Field: name (Phrase Match, Keyword Search)

| Rank | Result | Keyword Search Matches | Phrase Match |
|---|---|---|---|
| 1 | Nike Sportswear Shorts | 3 | Yes |
| 2 | Blue Sportswear Shorts | 2 | No |
| 3 | Flannel Drawstring Shorts | 1 | No |

The first result, Nike Sportswear Shorts, contains a Phrase Match with the query, so it gets a boost. It also has the most keyword matches. The other results do not have a Phrase Match, so they are subsequently ranked based on the number of keyword matches with the query.
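
The ranking above can be sketched as a score of one point per matched token plus a boost for a Phrase Match. The boost value of 1000 is an assumption (the source only says the boost is arbitrarily large), as are the whitespace tokenizer and function names.

```python
PHRASE_MATCH_BOOST = 1000  # assumed stand-in for an "arbitrarily large" boost


def tokenize(text: str) -> list[str]:
    return text.lower().split()


def is_phrase_match(query: str, field_value: str) -> bool:
    """True if the entire field value appears in the query as a contiguous phrase."""
    q, f = tokenize(query), tokenize(field_value)
    return bool(f) and any(q[i:i + len(f)] == f for i in range(len(q) - len(f) + 1))


def doc_score(query: str, field_value: str) -> int:
    """One point per distinct field token found in the query, plus any phrase boost."""
    query_tokens = set(tokenize(query))
    token_matches = sum(1 for token in set(tokenize(field_value)) if token in query_tokens)
    boost = PHRASE_MATCH_BOOST if is_phrase_match(query, field_value) else 0
    return token_matches + boost


query = "nike sportswear shorts"
names = ["Flannel Drawstring Shorts", "Nike Sportswear Shorts", "Blue Sportswear Shorts"]
ranked = sorted(names, key=lambda name: doc_score(query, name), reverse=True)
```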

Keyword Search + Semantic Search

If plain Keyword Search and Semantic Search are configured on the same field, the algorithm will behave as if plain Keyword Search weren’t applied at all. It will just perform Semantic Search on that field, as described in Semantic Search.

One thing to be wary of is configuring Keyword Search and Semantic Search on separate fields. Recall how Semantic Search evaluates on both keyword matches and semantic vector distance. If enabled on different fields, the keywords from the fields with Keyword Search will also be considered in the Semantic Search keyword matching.

This negatively impacts search quality: introducing keywords from an address field into Semantic Search on a name field, for example, would likely yield noisy keyword matches.

Inferred Filter + Others

Inferred Filter is the ‘end all be all’ of the searchable fields algorithms.

What we mean by that is whenever inferred Filter is configured with other algorithms, either on the same field or on different fields, the inferred Filter is always applied first and applies a strict filter value on the results set. For instance, if there is an inferred Filter match on the ‘color’ field for ‘blue’, we only return results that have ‘blue’ as a color. Then, any other algorithms in use will determine the ranking of items within the inferred-filtered results.

Additionally, any tokens that are used by the inferred Filter to return a result are ineligible for other algorithms to apply on. For instance, if there is an inferred Filter match on the ‘color’ field for ‘blue’, ‘blue’ can’t also be evaluated for Keyword Search. This is discussed in detail in ‘Using Up’ Tokens.

If inferred Filter couldn’t find any filters to apply, the algorithm will “fall back” to Keyword Search or whatever other algorithms are configured on that field.

If you don’t want a true filter, you could use Phrase Match to apply a similar level of strictness to your result matching. One thing to consider with this is that while inferred Filter can match on partial field values, a query must contain an entire Phrase Match field value in order for it to be applied.